Indexing ERC-20 Transfers on a Tanssi EVM Network

Introduction

SQD is a data network that allows rapid and cost-efficient retrieval of blockchain data from 100+ chains using SQD’s decentralized data lake and open-source SDK. In very simple terms, SQD can be thought of as an ETL (extract, transform, and load) tool with a GraphQL server included. It enables comprehensive filtering, pagination, and even full-text search capabilities.

SQD has native and full support for both EVM and Substrate data. SQD offers a Substrate Archive and Processor and an EVM Archive and Processor. The Substrate Archive and Processor can be used to index both Substrate and EVM data. This allows developers to extract on-chain data from any Tanssi-powered network and process EVM logs as well as Substrate entities (events, extrinsics, and storage items) in one single project and serve the resulting data with one single GraphQL endpoint. If you exclusively want to index EVM data, it is recommended to use the EVM Archive and Processor.

This tutorial is a step-by-step guide to building a Squid to index EVM data from start to finish. It's recommended that you follow along, taking each step described on your own, but you can also find a complete version of the Squid built in this tutorial in the tanssiSquid GitHub repository.

Check Prerequisites

To follow along with this tutorial, you'll need to have:

- Docker installed

- Docker Compose installed

- An empty Hardhat project. For step-by-step instructions, please refer to the Creating a Hardhat Project section of our Hardhat documentation page

Note

The examples in this guide are based on a macOS or Ubuntu 20.04 environment. If you're using Windows, you'll need to adapt them accordingly.

Furthermore, please ensure that you have Node.js and a package manager (such as npm or yarn) installed. To learn how to install Node.js, please check their official documentation.

Also, make sure you've initialized a package.json file for ES6 modules. You can initialize a default package.json file using npm by running npm init --yes.

Deploy an ERC-20 with Hardhat

Before we can index anything with SQD, we need to make sure we have something to index! This section will walk through deploying an ERC-20 token to your Tanssi-powered network so you can get started indexing it. However, feel free to skip to Create a Squid Project if either of the following two scenarios applies:

- You have already deployed an ERC-20 token to your network (and made several transfers)

- You would prefer to use an existing ERC-20 token deployed to the demo EVM network (of which there are already several transfer events)

If you'd like to use an existing ERC-20 token on the demo EVM network, you can use the below MyTok.sol contract. The hashes of the token transfers are provided as well to assist with any debugging.

In this section, we'll show you how to deploy an ERC-20 to your EVM network and we'll write a quick script to fire off a series of transfers that will be picked up by our SQD indexer. Ensure that you have initialized an empty Hardhat project via the instructions in the Creating a Hardhat Project section of our Hardhat documentation page.

Before we dive into creating our project, let's install a couple of dependencies that we'll need: the Hardhat Ethers plugin and OpenZeppelin contracts. The Hardhat Ethers plugin provides a convenient way to use the Ethers library to interact with the network. We'll use OpenZeppelin's base ERC-20 implementation to create an ERC-20. To install both of these dependencies, you can run:

npm install @nomicfoundation/hardhat-ethers ethers @openzeppelin/contracts

yarn add @nomicfoundation/hardhat-ethers ethers @openzeppelin/contracts

Now we can edit hardhat.config.js to include the following network and account configurations for our network. You can replace the demo EVM network values with the respective parameters for your own Tanssi-powered EVM network, which can be found at apps.tanssi.network.

hardhat.config.js

// 1. Import the Ethers plugin required to interact with the contract

require('@nomicfoundation/hardhat-ethers');

// 2. Add your private key that is funded with tokens of your Tanssi-powered network

// This is for example purposes only - **never store your private keys in a JavaScript file**

const privateKey = 'INSERT_PRIVATE_KEY';

/** @type import('hardhat/config').HardhatUserConfig */

module.exports = {

// 3. Specify the Solidity version

solidity: '0.8.20',

networks: {

// 4. Add the network specification for your Tanssi EVM network

demo: {

url: 'https://services.tanssi-testnet.network/dancelight-2001/',

chainId: 5678, // Fill in the EVM ChainID for your Tanssi-powered network

accounts: [privateKey],

},

},

};

Remember

You should never store your private keys in a JavaScript or Python file. It is done in this tutorial for ease of demonstration only. You should always manage your private keys with a designated secret manager or similar service.
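One way to keep the key out of the config file is to read it from an environment variable and validate its shape before passing it to Hardhat. The following is a minimal sketch and not part of the original tutorial: the helper name `loadPrivateKey` and the variable name `PRIVATE_KEY` are assumptions chosen for illustration.

```typescript
// Hypothetical helper: reads a private key from an environment-like object.
// `PRIVATE_KEY` is an assumed variable name, not one defined by Hardhat or Tanssi.
type Env = Record<string, string | undefined>;

function loadPrivateKey(env: Env, name: string = 'PRIVATE_KEY'): string {
  const raw = env[name];
  if (raw === undefined || raw.trim() === '') {
    throw new Error(`Environment variable ${name} is not set`);
  }

  // Accept keys with or without the 0x prefix and normalize to the prefixed form
  const trimmed = raw.trim();
  const key = trimmed.startsWith('0x') ? trimmed : `0x${trimmed}`;

  // A private key is 32 bytes, i.e., 64 hexadecimal characters
  if (!/^0x[0-9a-fA-F]{64}$/.test(key)) {
    throw new Error(`Environment variable ${name} is not a 32-byte hex string`);
  }
  return key;
}
```

In a Node.js process, the helper would typically be called as `loadPrivateKey(process.env)`. The same logic can be written in plain JavaScript for hardhat.config.js by dropping the type annotations.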

Create an ERC-20 Contract

For the purposes of this tutorial, we'll be creating a simple ERC-20 contract. We'll rely on OpenZeppelin's ERC-20 base implementation. We'll start by creating a file for the contract and naming it MyTok.sol:

mkdir -p contracts && touch contracts/MyTok.sol

Now we can edit the MyTok.sol file to include the following contract, which will mint an initial supply of MYTOKs and allow only the owner of the contract to mint additional tokens:

MyTok.sol

// SPDX-License-Identifier: MIT

pragma solidity ^0.8.20;

import "@openzeppelin/contracts/token/ERC20/ERC20.sol";

import "@openzeppelin/contracts/access/Ownable.sol";

contract MyTok is ERC20, Ownable {

constructor() ERC20("MyToken", "MTK") Ownable(msg.sender) {

_mint(msg.sender, 50000 * 10 ** decimals());

}

function mint(address to, uint256 amount) public onlyOwner {

_mint(to, amount);

}

}

Deploy an ERC-20 Contract

Now that we have our contract set up, we can compile and deploy our contract.

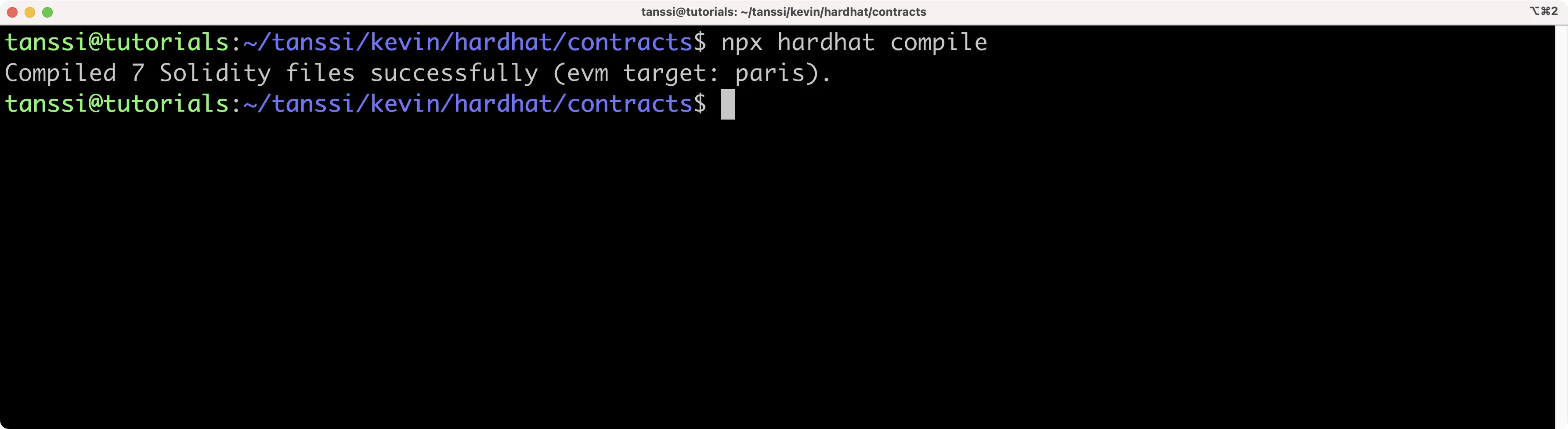

To compile the contract, you can run:

npx hardhat compile

This command will compile our contract and generate an artifacts directory containing the ABI of the contract.

To deploy our contract, we'll need to create a deployment script that deploys our ERC-20 contract. We'll use Alith's account to deploy the contract. The contract's constructor mints the initial supply of 50,000 MYTOK and sends it to the contract owner, which is Alith.

Let's take the following steps to deploy our contract:

-

Create a directory and file for our script:

mkdir -p scripts && touch scripts/deploy.js -

In the

deploy.jsfile, go ahead and add the following script:deploy.js

// scripts/deploy.js const hre = require('hardhat'); require('@nomicfoundation/hardhat-ethers'); async function main() { // Get ERC-20 contract const MyTok = await hre.ethers.getContractFactory('MyTok'); // Define custom gas price and gas limit // This is a temporary stopgap solution to a bug const customGasPrice = 50000000000; // example for 50 gwei const customGasLimit = 5000000; // example gas limit // Deploy the contract providing a gas price and gas limit const myTok = await MyTok.deploy({ gasPrice: customGasPrice, gasLimit: customGasLimit, }); // Wait for the deployment await myTok.waitForDeployment(); console.log(`Contract deployed to ${myTok.target}`); } main().catch((error) => { console.error(error); process.exitCode = 1; }); -

Run the script using the

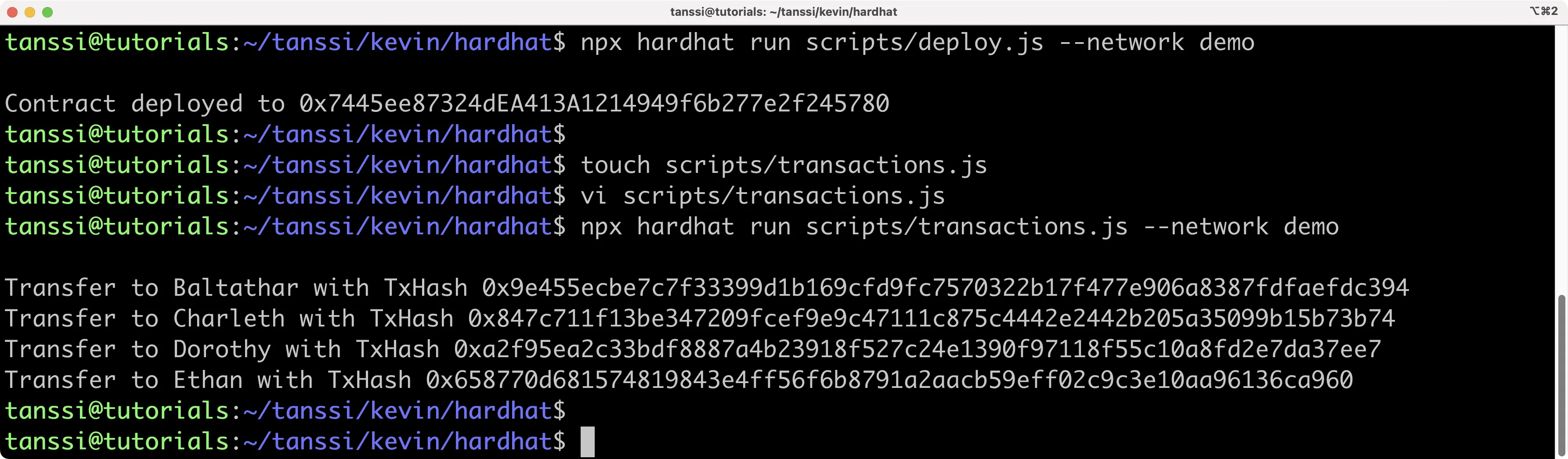

devnetwork configurations we set up in thehardhat.config.jsfile:npx hardhat run scripts/deploy.js --network demo

The address of the deployed contract should be printed to the terminal. Save the address, as we'll need it to interact with the contract in the following section.

Transfer ERC-20s

Since we'll be indexing Transfer events for our ERC-20, we'll need to send a few transactions that transfer some tokens from Alith's account to our other test accounts. We'll do this by creating a simple script that transfers 0.1 MYTOK (10^17 base units, since the token uses 18 decimals) to each of Baltathar, Charleth, Dorothy, and Ethan. We'll take the following steps:

Create a new script file to send the transactions:

touch scripts/transactions.js

In the transactions.js file, add the following script and insert the contract address of your deployed MyTok contract (output in the console in the prior step):

transactions.js

// We require the Hardhat Runtime Environment explicitly here. This is optional

// but useful for running the script in a standalone fashion through `node <script>`.

//

// You can also run a script with `npx hardhat run <script>`. If you do that, Hardhat

// will compile your contracts, add the Hardhat Runtime Environment's members to the

// global scope, and execute the script.

const hre = require('hardhat');

async function main() {

// Get Contract ABI

const MyTok = await hre.ethers.getContractFactory('MyTok');

// Define custom gas price and gas limit

// Gas price is typically specified in 'wei' and gas limit is just a number

// You can use Ethers.js utility functions to convert from gwei or ether if needed

const customGasPrice = 50000000000; // example for 50 gwei

const customGasLimit = 5000000; // example gas limit

// Plug ABI to address

const myTok = await MyTok.attach('INSERT_CONTRACT_ADDRESS');

const value = 100000000000000000n;

let tx;

// Transfer to Baltathar

tx = await myTok.transfer(

'0x3Cd0A705a2DC65e5b1E1205896BaA2be8A07c6e0',

value,

{

gasPrice: customGasPrice,

gasLimit: customGasLimit,

}

);

await tx.wait();

console.log(`Transfer to Baltathar with TxHash ${tx.hash}`);

// Transfer to Charleth

tx = await myTok.transfer(

'0x798d4Ba9baf0064Ec19eB4F0a1a45785ae9D6DFc',

value,

{

gasPrice: customGasPrice,

gasLimit: customGasLimit,

}

);

await tx.wait();

console.log(`Transfer to Charleth with TxHash ${tx.hash}`);

// Transfer to Dorothy

tx = await myTok.transfer(

'0x773539d4Ac0e786233D90A233654ccEE26a613D9',

value,

{

gasPrice: customGasPrice,

gasLimit: customGasLimit,

}

);

await tx.wait();

console.log(`Transfer to Dorothy with TxHash ${tx.hash}`);

// Transfer to Ethan

tx = await myTok.transfer(

'0xFf64d3F6efE2317EE2807d223a0Bdc4c0c49dfDB',

value,

{

gasPrice: customGasPrice,

gasLimit: customGasLimit,

}

);

await tx.wait();

console.log(`Transfer to Ethan with TxHash ${tx.hash}`);

}

// We recommend this pattern to be able to use async/await everywhere

// and properly handle errors.

main().catch((error) => {

console.error(error);

process.exitCode = 1;

});

Run the script to send the transactions:

npx hardhat run scripts/transactions.js --network demo

As each transaction is sent, you'll see a log printed to the terminal.
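The `value` passed to `transfer` in the script is denominated in the token's smallest unit. With 18 decimals (the default in OpenZeppelin's ERC-20 implementation), 100000000000000000 base units corresponds to 0.1 token. The sketch below shows one way to make that conversion explicit; `toBaseUnits` is a hypothetical helper written for illustration, and in a Hardhat script you would more likely use the `parseUnits` utility from Ethers.

```typescript
// Hypothetical helper: converts a human-readable decimal amount (e.g., "0.1")
// into the token's base units, using string arithmetic to avoid floating-point error.
function toBaseUnits(amount: string, decimals: number = 18): bigint {
  if (!/^\d+(\.\d+)?$/.test(amount)) {
    throw new Error(`Invalid amount: ${amount}`);
  }
  const [whole, fraction = ''] = amount.split('.');
  if (fraction.length > decimals) {
    throw new Error(`Too many decimal places (max ${decimals})`);
  }
  // Right-pad the fractional part so it occupies exactly `decimals` digits
  const padded = fraction.padEnd(decimals, '0');
  return BigInt(whole + padded);
}

// toBaseUnits('0.1') yields 100000000000000000n, the value used in transactions.js
// toBaseUnits('10') yields 10000000000000000000n
```

Working on the string representation rather than multiplying a floating-point number keeps the result exact, which matters because token amounts routinely exceed the range in which JavaScript numbers are precise.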

Now we can move on to creating our Squid to index the data on our Tanssi-powered network.

Create a Squid Project

Now we're going to create our Squid project. First, we'll need to install the Squid CLI:

npm i -g @subsquid/cli@latest

To verify successful installation, you can run:

sqd --version

Now we'll be able to use the sqd command to interact with our Squid project. To create our project, we're going to use the --template (-t) flag, which will create a project from a template. We'll be using the EVM Squid template, which is a starter project for indexing EVM chains.

You can run the following command to create an EVM Squid named tanssi-squid:

sqd init tanssi-squid --template evm

This will create a Squid with all of the necessary dependencies. You can go ahead and install the dependencies:

cd tanssi-squid && npm ci

Now that we have a starting point for our project, we'll need to configure our project to index ERC-20 Transfer events taking place on our Tanssi network.

Set Up the Indexer for ERC-20 Transfers

In order to index ERC-20 transfers, we'll need to take a series of actions:

- Define the database schema and generate the entity classes

- Use the ERC-20 contract's ABI to generate TypeScript interface classes

- Configure the processor by specifying exactly what data to ingest

- Transform the data and insert it into a TypeORM database in main.ts

- Run the indexer and query the squid

As mentioned, we'll first need to define the database schema for the transfer data. To do so, we'll edit the schema.graphql file, which is located in the root directory, and create a Transfer entity and Account entity. You can copy and paste the below schema, ensuring that any existing schema is first removed.

schema.graphql

type Account @entity {

"Account address"

id: ID!

transfersFrom: [Transfer!] @derivedFrom(field: "from")

transfersTo: [Transfer!] @derivedFrom(field: "to")

}

type Transfer @entity {

id: ID!

blockNumber: Int!

timestamp: DateTime!

txHash: String!

from: Account!

to: Account!

amount: BigInt!

}

Now we can generate the entity classes from the schema, which we'll use when we process the transfer data. This will create new classes for each entity in the src/model/generated directory.

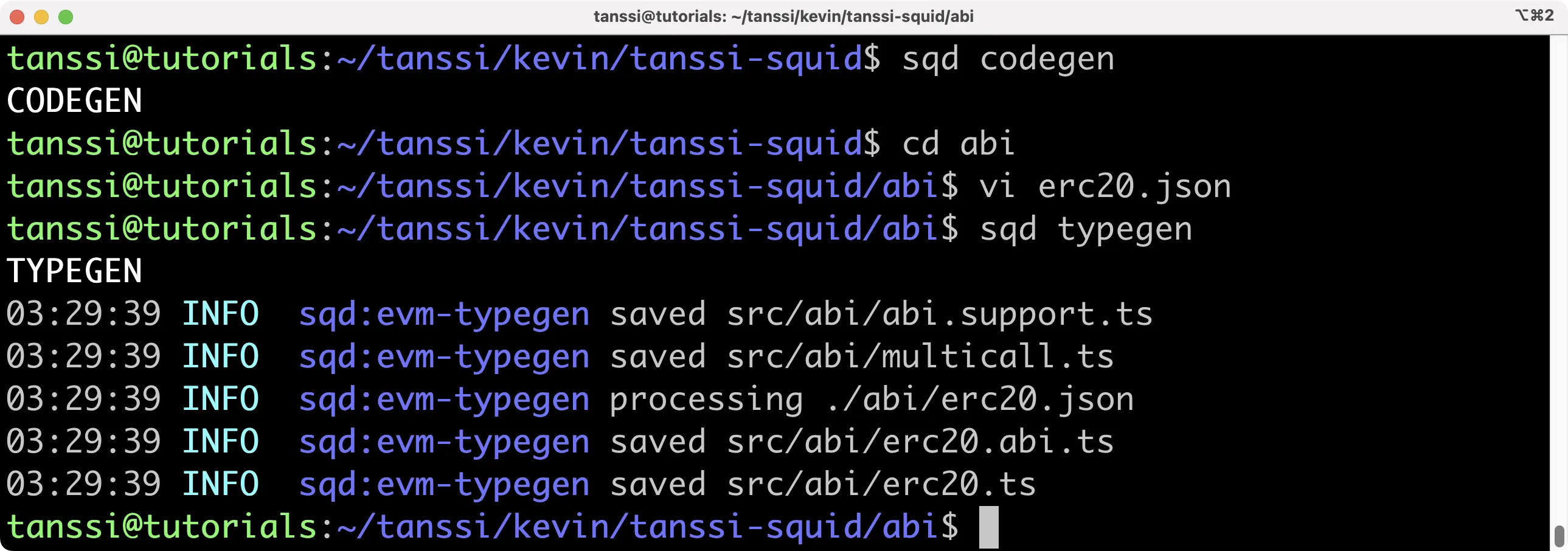

sqd codegen

In the next step, we'll use the ERC-20 ABI to automatically generate TypeScript interface classes. Below is a generic ERC-20 standard ABI. Copy and paste it into a file named erc20.json in the abi folder at the root level of the project.

ERC-20 ABI

[

{

"constant": true,

"inputs": [],

"name": "name",

"outputs": [

{

"name": "",

"type": "string"

}

],

"payable": false,

"stateMutability": "view",

"type": "function"

},

{

"constant": false,

"inputs": [

{

"name": "_spender",

"type": "address"

},

{

"name": "_value",

"type": "uint256"

}

],

"name": "approve",

"outputs": [

{

"name": "",

"type": "bool"

}

],

"payable": false,

"stateMutability": "nonpayable",

"type": "function"

},

{

"constant": true,

"inputs": [],

"name": "totalSupply",

"outputs": [

{

"name": "",

"type": "uint256"

}

],

"payable": false,

"stateMutability": "view",

"type": "function"

},

{

"constant": false,

"inputs": [

{

"name": "_from",

"type": "address"

},

{

"name": "_to",

"type": "address"

},

{

"name": "_value",

"type": "uint256"

}

],

"name": "transferFrom",

"outputs": [

{

"name": "",

"type": "bool"

}

],

"payable": false,

"stateMutability": "nonpayable",

"type": "function"

},

{

"constant": true,

"inputs": [],

"name": "decimals",

"outputs": [

{

"name": "",

"type": "uint8"

}

],

"payable": false,

"stateMutability": "view",

"type": "function"

},

{

"constant": true,

"inputs": [

{

"name": "_owner",

"type": "address"

}

],

"name": "balanceOf",

"outputs": [

{

"name": "balance",

"type": "uint256"

}

],

"payable": false,

"stateMutability": "view",

"type": "function"

},

{

"constant": true,

"inputs": [],

"name": "symbol",

"outputs": [

{

"name": "",

"type": "string"

}

],

"payable": false,

"stateMutability": "view",

"type": "function"

},

{

"constant": false,

"inputs": [

{

"name": "_to",

"type": "address"

},

{

"name": "_value",

"type": "uint256"

}

],

"name": "transfer",

"outputs": [

{

"name": "",

"type": "bool"

}

],

"payable": false,

"stateMutability": "nonpayable",

"type": "function"

},

{

"constant": true,

"inputs": [

{

"name": "_owner",

"type": "address"

},

{

"name": "_spender",

"type": "address"

}

],

"name": "allowance",

"outputs": [

{

"name": "",

"type": "uint256"

}

],

"payable": false,

"stateMutability": "view",

"type": "function"

},

{

"payable": true,

"stateMutability": "payable",

"type": "fallback"

},

{

"anonymous": false,

"inputs": [

{

"indexed": true,

"name": "owner",

"type": "address"

},

{

"indexed": true,

"name": "spender",

"type": "address"

},

{

"indexed": false,

"name": "value",

"type": "uint256"

}

],

"name": "Approval",

"type": "event"

},

{

"anonymous": false,

"inputs": [

{

"indexed": true,

"name": "from",

"type": "address"

},

{

"indexed": true,

"name": "to",

"type": "address"

},

{

"indexed": false,

"name": "value",

"type": "uint256"

}

],

"name": "Transfer",

"type": "event"

}

]

Next, we can use our contract's ABI to generate TypeScript interface classes. We can do this by running:

sqd typegen

This will generate the related TypeScript interface classes in the src/abi/erc20.ts file. For this tutorial, we'll be accessing the events specifically.
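To make the role of the generated event classes more concrete, the following sketch decodes a raw ERC-20 Transfer log by hand. It is a conceptual illustration only and not the code that sqd typegen produces: the `RawLog` and `DecodedTransfer` types and the function names are assumptions. It relies on the ABI above, where from and to are indexed (and therefore carried in the log's topics) while value is not indexed (and therefore carried in the log's data).

```typescript
// topic0 of Transfer(address,address,uint256), i.e., the Keccak-256 hash of the event signature
const TRANSFER_TOPIC =
  '0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef';

// Hypothetical minimal shapes used only for this sketch
interface RawLog {
  topics: string[];
  data: string;
}

interface DecodedTransfer {
  from: string;
  to: string;
  value: bigint;
}

// An indexed address is left-padded to 32 bytes; the address is the last 20 bytes
function topicToAddress(topic: string): string {
  return '0x' + topic.slice(-40).toLowerCase();
}

function decodeTransfer(log: RawLog): DecodedTransfer {
  if (log.topics.length !== 3 || log.topics[0] !== TRANSFER_TOPIC) {
    throw new Error('Not an ERC-20 Transfer log');
  }
  return {
    from: topicToAddress(log.topics[1]),
    to: topicToAddress(log.topics[2]),
    // The single non-indexed uint256 occupies the whole 32-byte data field
    value: BigInt(log.data),
  };
}
```

In the Squid itself, the generated `erc20.events.Transfer.decode(log)` call performs this work, so a hand-written decoder like this one is only useful for understanding or debugging what the processor receives.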

Configure the Processor

The processor.ts file tells SQD exactly what data you'd like to ingest. Transforming that data into the exact desired format will take place at a later step. In processor.ts, we'll need to indicate a data source, a contract address, the event(s) to index, and a block range.

Open up the src folder and head to the processor.ts file. First, we need to tell the SQD processor which contract we're interested in. Create a constant for the address in the following manner:

export const contractAddress = 'INSERT_CONTRACT_ADDRESS'.toLowerCase();

The .toLowerCase() is critical because the SQD processor is case-sensitive, and some block explorers format contract addresses with capitalization. Next, you'll see the line export const processor = new EvmBatchProcessor(), followed by .setDataSource. We'll need to make a few changes here. SQD has available archives for many chains that can speed up the data retrieval process, but it's unlikely your network has a hosted archive already. But not to worry, SQD can easily get the data it needs via your network's RPC URL. Go ahead and comment out or delete the archive line. Once done, your code should look similar to the below:

.setDataSource({

chain: {

url: assertNotNull(

'https://services.tanssi-testnet.network/dancelight-2001/'

),

rateLimit: 300,

},

})

The Squid template comes with a variable for your RPC URL defined in your .env file. You can replace that with the RPC URL for your network. For demonstration purposes, the RPC URL for the demo EVM network is hardcoded directly, as shown above. If you're setting the RPC URL in your .env, the respective line will look like this:

RPC_ENDPOINT=https://services.tanssi-testnet.network/dancelight-2001/

Now, let's define the event that we want to index by adding the following:

.addLog({

address: [contractAddress],

topic0: [erc20.events.Transfer.topic],

transaction: true,

})

The Transfer event is defined in erc20.ts, which was auto-generated when sqd typegen was run. To reference it, processor.ts needs the import import * as erc20 from './abi/erc20'; add it if your copy of the Squid EVM template doesn't already include it.

Block range is an important value to modify to narrow the scope of the blocks you're indexing. For example, if you launched your ERC-20 at block 650000, there is no need to query the chain before that block for transfer events. Setting an accurate block range will improve the performance of your indexer. You can set the earliest block to begin indexing in the following manner:

.setBlockRange({from: 632400,})

The chosen start block here corresponds to the relevant block to begin indexing on the demo EVM network, but you should change it to one relevant to your Tanssi-powered network and indexer project.

Change the setFields section to specify the following data for our processor to ingest:

.setFields({

log: {

topics: true,

data: true,

},

transaction: {

hash: true,

},

})

We also need to add the following imports to our processor.ts file:

import { Store } from '@subsquid/typeorm-store';

import * as erc20 from './abi/erc20';

Once you've completed the prior steps, your processor.ts file should look similar to this:

processor.ts

import { assertNotNull } from '@subsquid/util-internal';

import {

BlockHeader,

DataHandlerContext,

EvmBatchProcessor,

EvmBatchProcessorFields,

Log as _Log,

Transaction as _Transaction,

} from '@subsquid/evm-processor';

import { Store } from '@subsquid/typeorm-store';

import * as erc20 from './abi/erc20';

// Insert the address of your deployed ERC-20 contract here

export const contractAddress = 'INSERT_CONTRACT_ADDRESS'.toLowerCase();

export const processor = new EvmBatchProcessor()

.setDataSource({

chain: {

url: assertNotNull(

'https://services.tanssi-testnet.network/dancelight-2001/'

),

rateLimit: 300,

},

})

.setFinalityConfirmation(10)

.setFields({

log: {

topics: true,

data: true,

},

transaction: {

hash: true,

},

})

.addLog({

address: [contractAddress],

topic0: [erc20.events.Transfer.topic],

transaction: true,

})

.setBlockRange({

from: INSERT_START_BLOCK, // Note the lack of quotes here

});

export type Fields = EvmBatchProcessorFields<typeof processor>;

export type Block = BlockHeader<Fields>;

export type Log = _Log<Fields>;

export type Transaction = _Transaction<Fields>;

Transform and Save the Data

While processor.ts determines the data being consumed, main.ts determines the bulk of actions related to processing and transforming that data. In the simplest terms, we are processing the data that was ingested via the SQD processor and inserting the desired pieces into a TypeORM database. For more detailed information on how SQD works, be sure to check out the SQD docs on Developing a Squid.

Our main.ts file is going to scan through each processed block for the Transfer event and decode the transfer details, including the sender, receiver, and amount. The script also fetches account details for involved addresses and creates transfer objects with the extracted data. The script then inserts these records into a TypeORM database, enabling them to be easily queried. Let's break down the code that comprises main.ts in order:

- The job of main.ts is to run the processor and refine the collected data. In processor.run, the processor will iterate through all selected blocks and look for Transfer event logs. Whenever it finds a Transfer event, it's going to store it in an array of transfer events where it awaits further processing

- The TransferEvent interface is the type of structure that stores the data extracted from the event logs

- getTransfer is a helper function that extracts and decodes ERC-20 Transfer event data from a log entry. It constructs and returns a TransferEvent object, which includes details such as the transaction ID, block number, sender and receiver addresses, and the amount transferred. getTransfer is called at the time of storing the relevant transfer events into the array of transfers

- processTransfers enriches the transfer data and then inserts these records into a TypeORM database using the ctx.store methods. The account model, while not strictly necessary, allows us to introduce another entity in the schema to demonstrate working with multiple entities in your Squid

- getAccount is a helper function that manages the retrieval and creation of account objects. Given an account ID and a map of existing accounts, it returns the corresponding account object. If the account doesn't exist in the map, it creates a new one, adds it to the map, and then returns it

We'll demo a sample query in a later section. You can copy and paste the below code into your main.ts file:

main.ts

import { In } from 'typeorm';

import { assertNotNull } from '@subsquid/evm-processor';

import { TypeormDatabase } from '@subsquid/typeorm-store';

import * as erc20 from './abi/erc20';

import { Account, Transfer } from './model';

import {

Block,

contractAddress,

Log,

Transaction,

processor,

} from './processor';

// 1. Iterate through all selected blocks and look for transfer events,

// storing the relevant events in an array of transfer events

processor.run(new TypeormDatabase({ supportHotBlocks: true }), async (ctx) => {

let transfers: TransferEvent[] = [];

for (let block of ctx.blocks) {

for (let log of block.logs) {

if (

log.address === contractAddress &&

log.topics[0] === erc20.events.Transfer.topic

) {

transfers.push(getTransfer(ctx, log));

}

}

}

await processTransfers(ctx, transfers);

});

// 2. Define an interface to hold the data from the transfer events

interface TransferEvent {

id: string;

block: Block;

transaction: Transaction;

from: string;

to: string;

amount: bigint;

}

// 3. Extract and decode ERC-20 transfer event data from a log entry

function getTransfer(ctx: any, log: Log): TransferEvent {

let event = erc20.events.Transfer.decode(log);

let from = event.from.toLowerCase();

let to = event.to.toLowerCase();

let amount = event.value;

let transaction = assertNotNull(log.transaction, `Missing transaction`);

return {

id: log.id,

block: log.block,

transaction,

from,

to,

amount,

};

}

// 4. Enrich and insert data into typeorm database

async function processTransfers(ctx: any, transfersData: TransferEvent[]) {

let accountIds = new Set<string>();

for (let t of transfersData) {

accountIds.add(t.from);

accountIds.add(t.to);

}

let accounts = await ctx.store

.findBy(Account, { id: In([...accountIds]) })

.then((q: any[]) => new Map(q.map((i: any) => [i.id, i])));

let transfers: Transfer[] = [];

for (let t of transfersData) {

let { id, block, transaction, amount } = t;

let from = getAccount(accounts, t.from);

let to = getAccount(accounts, t.to);

transfers.push(

new Transfer({

id,

blockNumber: block.height,

timestamp: new Date(block.timestamp),

txHash: transaction.hash,

from,

to,

amount,

})

);

}

await ctx.store.upsert(Array.from(accounts.values()));

await ctx.store.insert(transfers);

}

// 5. Helper function to get account object

function getAccount(m: Map<string, Account>, id: string): Account {

let acc = m.get(id);

if (acc == null) {

acc = new Account();

acc.id = id;

m.set(id, acc);

}

return acc;

}

Now we've taken all of the steps necessary and are ready to run our indexer!

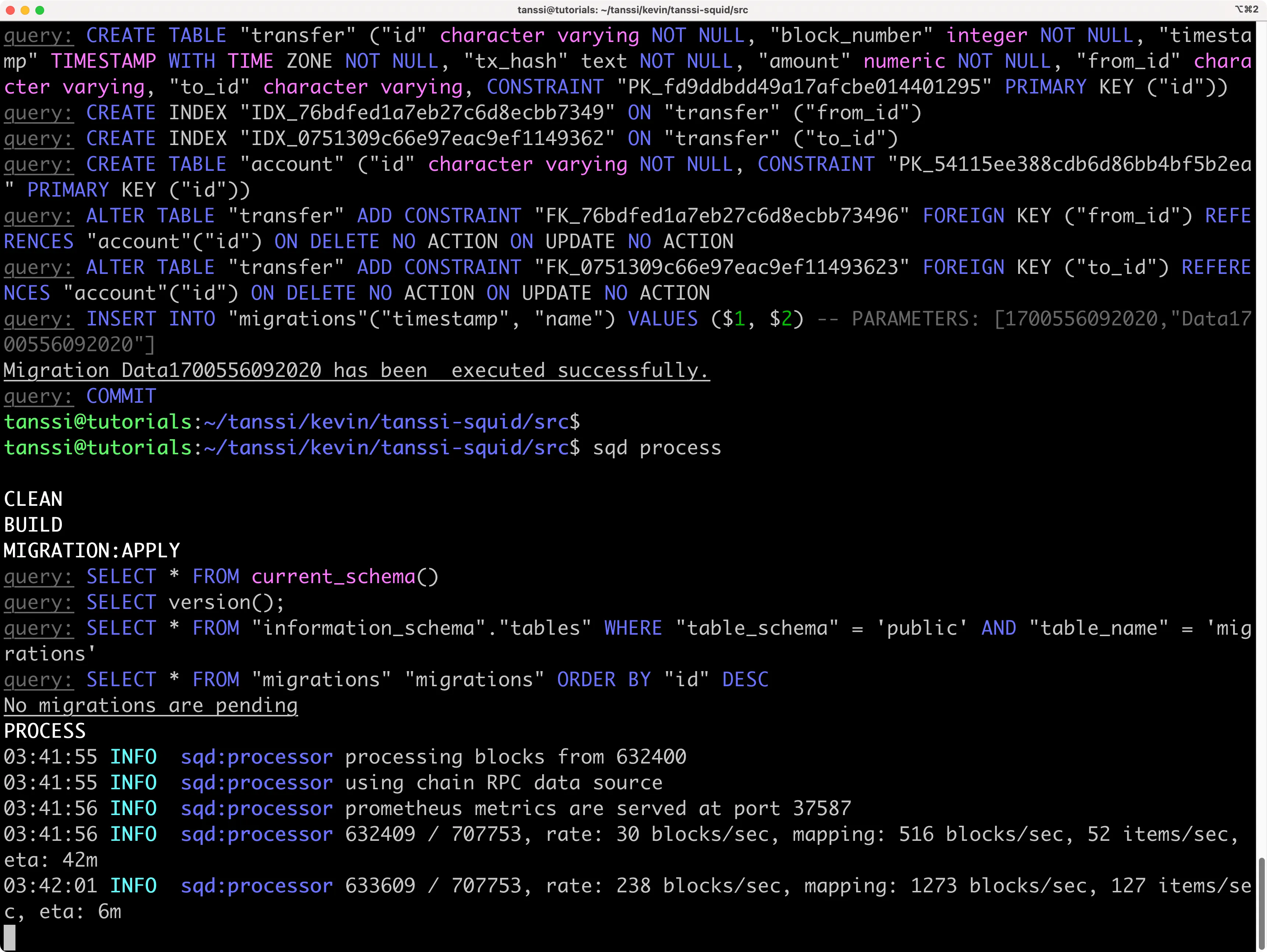

Run the Indexer

To run our indexer, we're going to run a series of sqd commands:

Build our project:

sqd build

Launch the database:

sqd up

Remove the database migration file that comes with the EVM template, generate a new one for our new database schema, and apply it:

sqd migration:generate

sqd migration:apply

Launch the processor:

sqd process

In your terminal, you should see your indexer starting to process blocks!

Query Your Squid

To query your squid, open up a new terminal window within your project and run the following command:

sqd serve

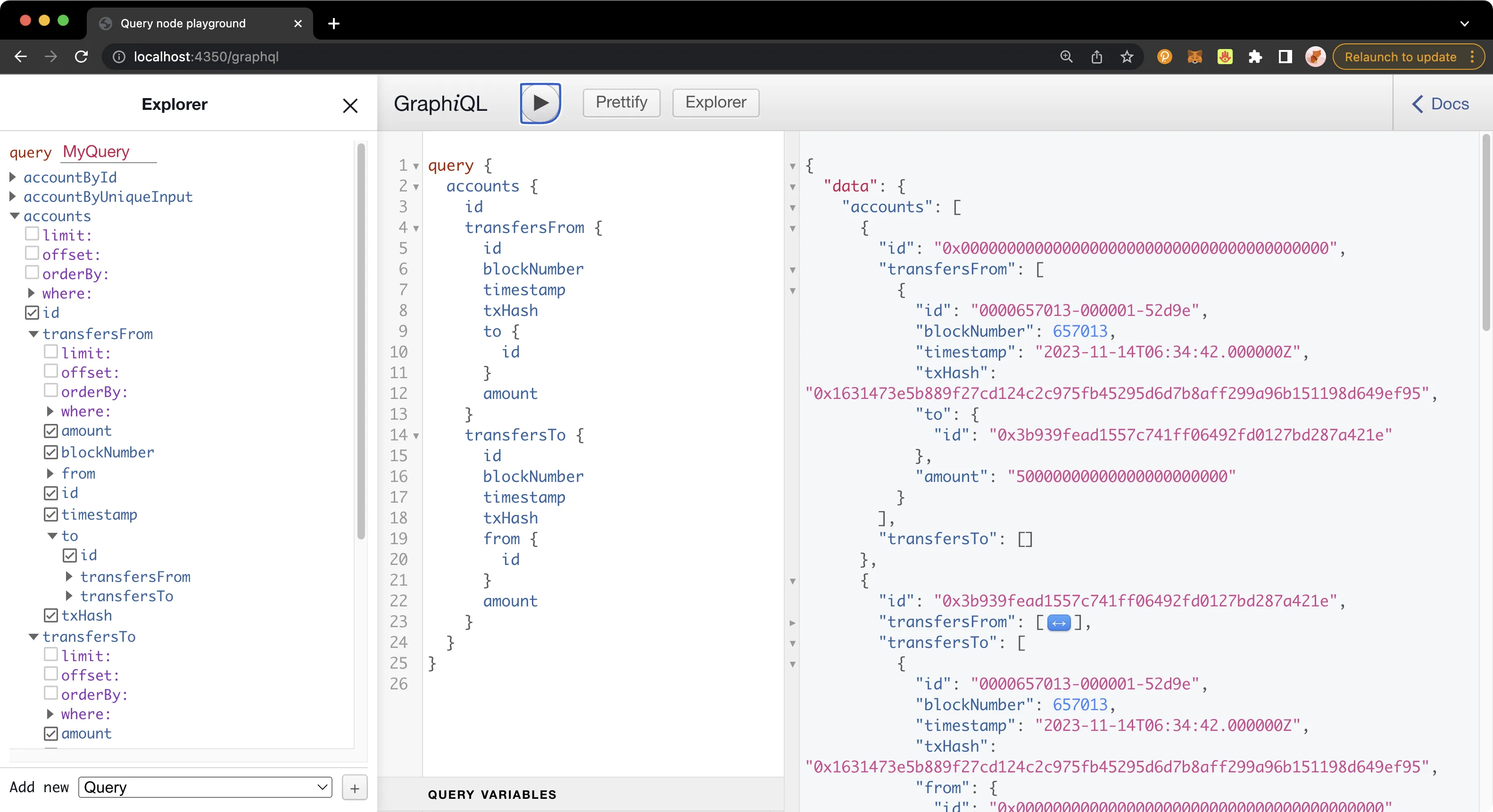

And that's it! You can now run queries against your Squid on the GraphQL playground at http://localhost:4350/graphql. Try crafting your own GraphQL query, or use the below one:

Example query

query {

accounts {

id

transfersFrom {

id

blockNumber

timestamp

txHash

to {

id

}

amount

}

transfersTo {

id

blockNumber

timestamp

txHash

from {

id

}

amount

}

}

}
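The same endpoint can also be queried programmatically. The sketch below builds a GraphQL request for the most recent transfers and posts it to the local server. It assumes Node.js 18 or later (for the global `fetch`), and it assumes that the squid's generated API exposes a `transfers` query accepting `limit` and `orderBy` arguments; check the schema shown in the GraphQL playground before relying on those names. The function names are hypothetical.

```typescript
// Endpoint from this tutorial; adjust it if your squid is served elsewhere
const GRAPHQL_URL = 'http://localhost:4350/graphql';

interface GraphQLRequest {
  query: string;
  variables: Record<string, unknown>;
}

// Builds the request body for fetching the latest transfers.
// The `limit` and `orderBy` arguments are assumptions about the generated API.
function buildRecentTransfersRequest(limit: number): GraphQLRequest {
  if (!Number.isInteger(limit) || limit <= 0) {
    throw new Error('limit must be a positive integer');
  }
  const query = `
    query RecentTransfers($limit: Int!) {
      transfers(limit: $limit, orderBy: blockNumber_DESC) {
        id
        blockNumber
        txHash
        amount
        from { id }
        to { id }
      }
    }`;
  return { query, variables: { limit } };
}

// Sends the request and returns the `data` field of the GraphQL response
async function fetchRecentTransfers(limit: number): Promise<unknown> {
  const response = await fetch(GRAPHQL_URL, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(buildRecentTransfersRequest(limit)),
  });
  if (!response.ok) {
    throw new Error(`GraphQL request failed with status ${response.status}`);
  }
  const payload = (await response.json()) as { data?: unknown; errors?: unknown };
  if (payload.errors !== undefined) {
    throw new Error(`GraphQL errors: ${JSON.stringify(payload.errors)}`);
  }
  return payload.data;
}
```

With sqd serve running, calling `fetchRecentTransfers(5)` should resolve to an object containing up to five transfers. Passing the limit as a GraphQL variable rather than interpolating it into the query string keeps the query text constant and avoids injection problems.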

Debug Your Squid

It may seem tricky at first to debug errors when building your Squid, but fortunately, there are several techniques you can use to streamline this process. First and foremost, if you're facing errors with your Squid, you should enable debug mode in your .env file by uncommenting the debug mode line. This will trigger much more verbose logging and will help you locate the source of the error.

# Uncommenting the below line enables debug mode

SQD_DEBUG=*

You can also add logging statements directly to your main.ts file to indicate specific parameters like block height and more. For example, see this version of main.ts, which has been enhanced with detailed logging:

main.ts

import { In } from 'typeorm';

import { assertNotNull } from '@subsquid/evm-processor';

import { TypeormDatabase } from '@subsquid/typeorm-store';

import * as erc20 from './abi/erc20';

import { Account, Transfer } from './model';

import {

Block,

contractAddress,

Log,

Transaction,

processor,

} from './processor';

processor.run(new TypeormDatabase({ supportHotBlocks: true }), async (ctx) => {

ctx.log.info('Processor started');

let transfers: TransferEvent[] = [];

ctx.log.info(`Processing ${ctx.blocks.length} blocks`);

for (let block of ctx.blocks) {

ctx.log.debug(`Processing block number ${block.header.height}`);

for (let log of block.logs) {

ctx.log.debug(`Processing log with address ${log.address}`);

if (

log.address === contractAddress &&

log.topics[0] === erc20.events.Transfer.topic

) {

ctx.log.info(`Transfer event found in block ${block.header.height}`);

transfers.push(getTransfer(ctx, log));

}

}

}

ctx.log.info(`Found ${transfers.length} transfers, processing...`);

await processTransfers(ctx, transfers);

ctx.log.info('Processor finished');

});

interface TransferEvent {

id: string;

block: Block;

transaction: Transaction;

from: string;

to: string;

amount: bigint;

}

function getTransfer(ctx: any, log: Log): TransferEvent {

let event = erc20.events.Transfer.decode(log);

let from = event.from.toLowerCase();

let to = event.to.toLowerCase();

let amount = event.value;

let transaction = assertNotNull(log.transaction, `Missing transaction`);

ctx.log.debug(

`Decoded transfer event: from ${from} to ${to} amount ${amount.toString()}`

);

return {

id: log.id,

block: log.block,

transaction,

from,

to,

amount,

};

}

async function processTransfers(ctx: any, transfersData: TransferEvent[]) {

ctx.log.info('Starting to process transfer data');

let accountIds = new Set<string>();

for (let t of transfersData) {

accountIds.add(t.from);

accountIds.add(t.to);

}

ctx.log.debug(`Fetching accounts for ${accountIds.size} addresses`);

let accounts = await ctx.store

.findBy(Account, { id: In([...accountIds]) })

.then((q: any[]) => new Map(q.map((i: any) => [i.id, i])));

ctx.log.info(

`Accounts fetched, processing ${transfersData.length} transfers`

);

let transfers: Transfer[] = [];

for (let t of transfersData) {

let { id, block, transaction, amount } = t;

let from = getAccount(accounts, t.from);

let to = getAccount(accounts, t.to);

transfers.push(

new Transfer({

id,

blockNumber: block.height,

timestamp: new Date(block.timestamp),

txHash: transaction.hash,

from,

to,

amount,

})

);

}

ctx.log.debug(`Upserting ${accounts.size} accounts`);

await ctx.store.upsert(Array.from(accounts.values()));

ctx.log.debug(`Inserting ${transfers.length} transfers`);

await ctx.store.insert(transfers);

ctx.log.info('Transfer data processing completed');

}

function getAccount(m: Map<string, Account>, id: string): Account {

let acc = m.get(id);

if (acc == null) {

acc = new Account();

acc.id = id;

m.set(id, acc);

}

return acc;

}

See the SQD guide to logging for more information on debug mode.

Common Errors

Below are some common errors you may face when building a project and how you can solve them.

Error response from daemon: driver failed programming external connectivity on endpoint my-awesome-squid-db-1

(49df671a7b0531abbb5dc5d2a4a3f5dc7e7505af89bf0ad1e5480bd1cdc61052):

Bind for 0.0.0.0:23798 failed: port is already allocated

This error indicates that you have another instance of SQD running somewhere else. You can stop that gracefully with the command sqd down or by pressing the Stop button next to the container in Docker Desktop.

Error: connect ECONNREFUSED 127.0.0.1:23798

at createConnectionError (node:net:1634:14)

at afterConnectMultiple (node:net:1664:40) {

errno: -61,code: 'ECONNREFUSED',syscall: 'connect',

address: '127.0.0.1',port: 23798}

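This error indicates that the Squid's database isn't accepting connections, typically because its container isn't running. To resolve this, run sqd up before you run sqd migration:generate.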

Is your Squid error-free, yet you aren't seeing any transfers detected? Make sure your log events are consistent and identical to the ones your processor is looking for. Your contract address also needs to be lowercase, which you can be assured of by defining it as follows:

export const contractAddress = '0x37822de108AFFdd5cDCFDaAa2E32756Da284DB85'.toLowerCase();
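When debugging a missing-transfer problem, it can help to compare addresses in a way that ignores checksum capitalization. The following is a small sketch under that assumption; `normalizeAddress` and `isSameAddress` are hypothetical helpers, not part of the SQD SDK.

```typescript
// Hypothetical helper: validates the basic shape of an EVM address and lowercases it
function normalizeAddress(address: string): string {
  if (!/^0x[0-9a-fA-F]{40}$/.test(address)) {
    throw new Error(`Not a 20-byte hex address: ${address}`);
  }
  return address.toLowerCase();
}

// Compares two addresses regardless of checksum capitalization
function isSameAddress(a: string, b: string): boolean {
  return normalizeAddress(a) === normalizeAddress(b);
}

// A checksummed address copied from a block explorer matches its lowercase form
const fromExplorer = '0x37822de108AFFdd5cDCFDaAa2E32756Da284DB85';
const fromProcessor = '0x37822de108affdd5cdcfdaaa2e32756da284db85';
console.log(isSameAddress(fromExplorer, fromProcessor)); // true
```

The shape check also catches a placeholder such as INSERT_CONTRACT_ADDRESS that was never replaced, since it throws instead of silently matching nothing.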

Created: November 10, 2023